Total Integration Testing for software development teams

13/03/2013 - Laurent Eschenauer (@eschnou)

Every morning, I scan through the night's emails while sipping coffee. One of them always stands out with its reassuring simplicity: “Integration tests results – No errors”. With just these few words, I know that our code can be built, passes its unit tests, can be packaged, can be deployed on a fresh infrastructure, and continues to work as expected, supporting our customer use cases.

To reach this level of confidence, we have designed a ‘Total Integration Test’ that goes far beyond continuous integration and unit testing, also validating our packaging, deployment and product integration with all external components. In this post, I'll detail how and why we did it.

Why continuous integration is not enough

Continuous Integration (not to be confused with Integration Testing) is a software development best practice which consists of merging all changes from all developers as often as possible and running a set of tests against them (usually a Unit Test suite). Thanks to such a suite, your team can validate that the code can always be built from the latest source and behaves correctly, at least at the individual component level.

Although a good start, this is usually not sufficient. In particular, this does not validate that:

- The product works when integrated with all other components (Integration Testing)

- The product can be automatically re-deployed on all targeted platforms (Deployment Testing)

- The product can be packaged for all target platforms (Packaging Testing)

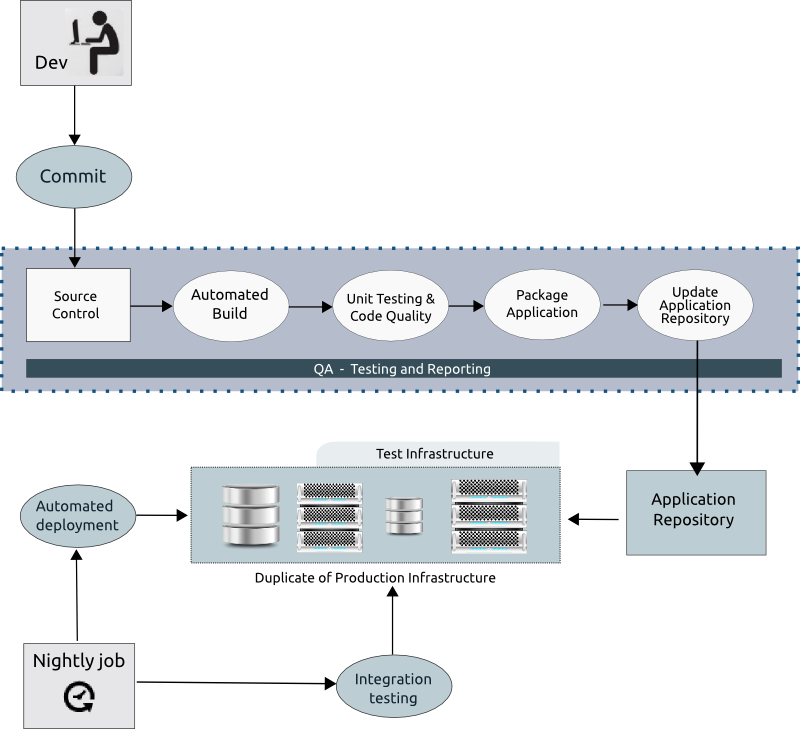

Of course, all software projects may not have these requirements and different teams will have different priorities. Yet, as we will show in the next section, it is quite easy to build a such a test suite with a few simple tools. This process can be run automatically to validate all these points on a daily basis (e.g. at ComodIT we do it nightly). The following is a high-level diagram showing all components of our Total Integration Test Suite.

Prerequisite - Continuous Integration

If you don't have a Continuous Integration environment yet, you should start there. In practice, this means having Source Control in place (e.g. Git, Mercurial, SVN), an Automated Build system, a Unit Test suite, and ideally a server doing the continuous integration for you (e.g. Jenkins, Travis-CI). There is no point doing Integration Testing if you don't have quality unit testing first. The unit tests give you confidence that your components are correct; the integration tests give you confidence that these components can work together.

Step 1 - Automated application packaging

If you are distributing your product (e.g. we also have an on-premise version of ComodIT), then you likely already have one or more package formats (e.g. RPM, DEB, MSI). By automating this process, you can deliver nightly builds or, even better, continuously build new packages for each new commit on the mainline. In our development process at ComodIT, we use Jenkins Post Steps to automatically trigger the build of packages and the update of a development repository.
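Building a package for every commit only works if each build gets a distinct, traceable version. The following is a minimal sketch of one way to stamp development packages; the `nightly_version` helper and the version scheme itself are assumptions made for illustration, not what our Jenkins jobs actually produce.

```python
from datetime import date


def nightly_version(base_version, build_date, commit):
    """Compose a version string for a development package.

    Hypothetical scheme: <base>.<YYYYMMDD>.git<short commit hash>.
    """
    short_hash = commit[:7]  # the first 7 characters are enough to find the commit
    return f"{base_version}.{build_date:%Y%m%d}.git{short_hash}"


if __name__ == "__main__":
    # Example values only; a real job would read them from the build environment.
    print(nightly_version("1.2.0", date(2013, 3, 13), "a1b2c3d4e5f6"))
```

A post-build step could pass such a string to whatever packaging tool is in use, so that any package found in the development repository can be traced back to a commit.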

Step 2 - Automated infrastructure deployment

The second step consists of setting up a process to automatically deploy your infrastructure and application, together with all its dependencies. Such an automated process will also be helpful for running a disaster recovery plan, deploying staging/QA environments on-demand, testing the quality of the deployment process, etc.

Orchestrating the deployment of a multi-tier application and its dependencies is straightforward with ComodIT. In one of our latest examples, we showed how to deploy an OpenShift Origin broker and multiple nodes. This is a good example of what ‘Integration’ means. Almost a dozen components have to be deployed and integrated to have a fully functional product:

- BIND as the DNS server

- PostgreSQL as the database server

- RabbitMQ as the messaging server

- MCollective for broker-node communication

- Cross server certificates and keys

- Various application components (broker, node, port-proxy, console, etc.)
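With this many pieces, deployment order matters: a component should only be deployed once the services it relies on are up. The sketch below shows one way to derive such an order with a topological sort. The dependency edges in `DEPENDS_ON` are illustrative assumptions, not the actual OpenShift Origin layout, and `graphlib` requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: component -> components that must exist first.
# These edges are assumptions chosen for illustration only.
DEPENDS_ON = {
    "bind": set(),
    "postgresql": set(),
    "rabbitmq": set(),
    "certificates": {"bind"},
    "mcollective": {"rabbitmq", "certificates"},
    "broker": {"bind", "postgresql", "mcollective"},
    "node": {"broker", "mcollective"},
    "console": {"broker"},
}


def deployment_order(depends_on):
    """Return the components in an order where dependencies come first.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(depends_on).static_order())


if __name__ == "__main__":
    for step, name in enumerate(deployment_order(DEPENDS_ON), start=1):
        print(f"{step}. deploy {name}")
```

Declaring dependencies and letting a tool compute the order also makes circular dependencies visible early, instead of surfacing as a failed deployment.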

When building a product that targets multiple platforms/versions, the testing complexity explodes. Your product may be fine, but will it work with the latest version of the packages available in this or that distribution? This is what an automated deployment will help you to test.
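To see how quickly the number of combinations grows, here is a small sketch that enumerates a test matrix. The distributions, versions and topologies listed are made-up examples, not the platforms we actually target.

```python
from itertools import product

# Hypothetical targets, used only to illustrate the combinatorics.
DISTRIBUTIONS = {
    "centos": ["6.4"],
    "fedora": ["17", "18"],
    "ubuntu": ["12.04", "12.10"],
}
TOPOLOGIES = ["single-host", "broker-plus-two-nodes"]


def build_matrix(distributions, topologies):
    """Return one entry per (distribution, version, topology) combination."""
    targets = [
        (name, version)
        for name, versions in distributions.items()
        for version in versions
    ]
    return [
        {"distribution": name, "version": version, "topology": topology}
        for (name, version), topology in product(targets, topologies)
    ]


if __name__ == "__main__":
    matrix = build_matrix(DISTRIBUTIONS, TOPOLOGIES)
    print(f"{len(matrix)} deployments to test")
    for entry in matrix:
        print(entry)
```

Five platform versions and two topologies already mean ten full deployments, which is why each of them needs to be automated rather than done by hand.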

Step 3 - Build an Integration Test

In the case of ComodIT, we have opted for a usage-based integration test suite where we test our platform against real end-to-end use cases. We are leveraging our Python Library to write the tests and to execute them against a testing infrastructure. Using this, we are achieving 100% coverage of API calls and of the library itself.

Such a test suite gives us really high confidence that ‘it works’, since we are actually executing real-life use cases on the system. In addition, it is easy to extend the suite with a new test whenever a bug is found, which makes sure the same regression does not reappear at a later stage. In the future, we will add a UI testing layer to run use cases against our Web UI in a similar fashion.
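To give an idea of what a usage-based test looks like, here is a minimal sketch written with Python's standard `unittest` module. It does not use the real ComodIT library: `FakePlatformClient` and all of its methods are hypothetical stand-ins, kept in memory so the example runs anywhere. In a real suite, the client would talk to the freshly deployed test infrastructure.

```python
import unittest


class FakePlatformClient:
    """In-memory stand-in for a real API client (hypothetical interface)."""

    def __init__(self):
        self._hosts = {}

    def create_host(self, name, application):
        if name in self._hosts:
            raise ValueError(f"host {name!r} already exists")
        self._hosts[name] = {"application": application, "state": "DEFINED"}

    def provision(self, name):
        self._hosts[name]["state"] = "READY"

    def get_state(self, name):
        return self._hosts[name]["state"]

    def delete_host(self, name):
        del self._hosts[name]

    def list_hosts(self):
        return sorted(self._hosts)


class ProvisionWebServerUseCase(unittest.TestCase):
    """Walks through one customer use case from start to finish."""

    def setUp(self):
        self.client = FakePlatformClient()

    def test_full_lifecycle(self):
        self.client.create_host("web-1", application="apache")
        self.assertEqual(self.client.get_state("web-1"), "DEFINED")
        self.client.provision("web-1")
        self.assertEqual(self.client.get_state("web-1"), "READY")
        self.client.delete_host("web-1")
        self.assertEqual(self.client.list_hosts(), [])

    def test_duplicate_host_is_rejected(self):
        self.client.create_host("web-1", application="apache")
        with self.assertRaises(ValueError):
            self.client.create_host("web-1", application="apache")


if __name__ == "__main__":
    unittest.main()
```

Each test reads like a scenario a customer would follow, so a failure points directly at a broken use case rather than at an isolated function.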

Step 4 - Putting it all together

Nothing fancy here, just a good old script and a cron job. Since we always have our latest source packaged automatically, we can simply leverage our automated deployment to set up a new infrastructure nightly and then execute the integration tests. An email is sent to the dev team with the list of tests that have failed, along with any other issues encountered during the run.

We are using a private cloud for this test. Resource consumption is therefore not an issue, and we can leave the infrastructure up for the day to do forensics if required. The first step when launching a new test is then to automatically tear down the previous infrastructure.
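The nightly cycle described above can be sketched in a few lines of Python. This is an illustration under assumptions, not our actual script: the `teardown`, `deploy` and `run_tests` callables are placeholders for whatever drives your infrastructure, and the failure subject line is invented to mirror the "No errors" one.

```python
from email.message import EmailMessage


def run_nightly(teardown, deploy, run_tests):
    """Run one nightly cycle and return a list of failure descriptions.

    The three arguments are caller-supplied callables (placeholders here).
    run_tests must return a list of strings, one per failed test.
    """
    try:
        teardown()  # remove yesterday's infrastructure, kept up for forensics
        deploy()    # fresh infrastructure built from the latest packages
    except Exception as exc:
        # Without an infrastructure there is nothing to test: report and stop.
        return [f"setup failed: {exc}"]
    return list(run_tests())


def build_report(failures, sender, recipient):
    """Build the email summarizing the run (sending is left to the caller)."""
    message = EmailMessage()
    message["From"] = sender
    message["To"] = recipient
    if failures:
        message["Subject"] = f"Integration tests results - {len(failures)} error(s)"
        message.set_content("\n".join(failures))
    else:
        message["Subject"] = "Integration tests results - No errors"
        message.set_content("All integration tests passed.")
    return message


if __name__ == "__main__":
    # Dummy steps so the sketch runs on its own.
    failures = run_nightly(lambda: None, lambda: None, lambda: [])
    print(build_report(failures, "ci@example.com", "dev@example.com")["Subject"])
```

The message can then be handed to `smtplib.SMTP(...).send_message(...)`, and the script scheduled from cron. Treating a failed teardown or deployment as a reportable failure matters: a broken deployment process is exactly the kind of problem this nightly run is meant to catch.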
